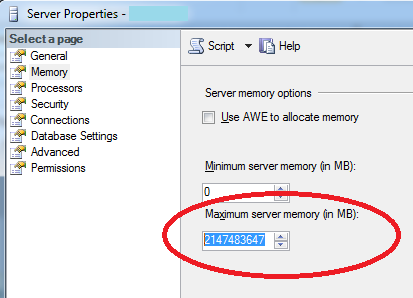

I ran into a scenario this week where a runaway query on a 1.4 million record database caused SQL Server’s memory usage to balloon from 150MBs to 4GBs in just a few minutes. I was surprised that SQL Server let the process consume that much of the system resources. So after a couple minutes digging I discovered that the default maximum server memory setting for SQL Server 2008 is 2147483647 MBs, or a ridiculous 2048 Terabytes. You’ve gotta be kidding me!

Now granted it’s rare to have a runaway query like that, but I don’t understand why the max memory setting is left wide open by default. Why doesn’t the installer automatically figure out the default setting based on available RAM, and adjust it accordingly based on some algorithm? Maybe there’s a good reason for this, but on the surface it sounds like a serious oversight.

Solution

So, for the purpose of our needs I cranked down the maximum server memory setting to 50% of existing RAM using SQL Server Management Studio. Your needs may be different and I recommend you read Microsoft’s documentation carefully, the link is included below. It’s been a long time since I’ve had a corrupted database, and running out of system memory seemed like an almost guaranteed way to experience that.

Reference: